Dealing with CSV or JSON data today is more and more common. I do it on daily basis, since the our application send data to our microservice gateway backend is in a (compressed) JSON format.

Sometimes, especially when debugging or developing a new feature, I need to access that JSON data, before is sent to any further microservices for processing or, after that, being stored in the database.

So far I usually used CloudBerry Explorer to locate and download the JSON I was interested into and that a tool like Notepad++ with JSON-Viewer plugin or Visual Studio Code to load and analyze the content.

Being Azure SQL or main database, I spend a lot of time working with T-SQL, so I would really love to be able to query JSON directly from T-SQL, without even have the need to download the file from the Azure Blob Stored where it is stored. This will make my work more efficient and easier.

I would love to access JSON where it is, just like Hadoop or Azure Data Lake allows you to do

Well, you can. I just find out that with the latest additions (added since SQL Server 2017 CTP 1.1 and already available on Azure SQL v 12.0.2000.8) it is incredibly easy.

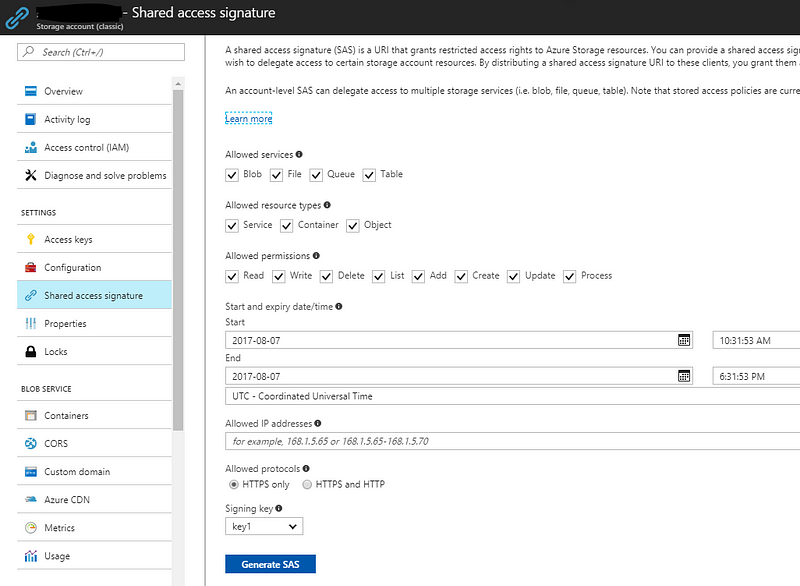

First of all the Shared Access Signature needs to be created to allow Azure SQL to access the Azure Blob Store where you have your JSON files. This can be done using the Azure Portal, from the Azure Storage Blade

SAS Signature Creation Window

or you can also do it via the Azure CLI 2.0 as described here:

Azure CLI 2.0: Generate SAS Token for Blob in Azure Storage

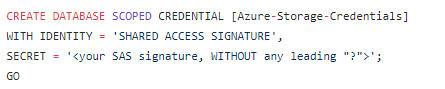

Once you have the signature a Database Scoped Credential that points to the created Shared Access Signature needs to be created too:

If you haven’t done it before you will be warned that you need to create a Database Master Key before being able to run the above command.

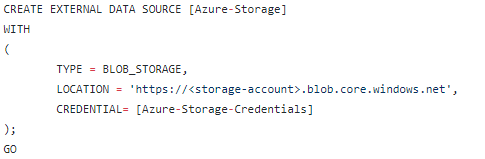

After that credentials are created, it’s time to point to the Azure Blob Account where your JSON files are stored by creating a External Data Source:

Once this is done, you can just start to play with JSON files using the OPENROWSET along with OPENJSON:

and voilà, JSON content are here at your fingertips. For example, I can access to all activity data contained in our “running session” json:

This is just amazing: now my work is much simpler, especially when I’m traveling and, maybe, I don’t have a good internet access. I can process and work on my JSON file without even have them leaving the cloud.

What about CSV?

If you have a CSV file the technique is very similar, and it is already documented in the official Microsoft documentation:

Examples of Bulk Access to Data in Azure Blob Storage

What about on-premises?

The same approach is doable also via SQL Server 2017 (now in CTP 2.1). You can also access file not stored in the cloud, but on your on-premises storage. In such case, of course, you don’t specify the Shared Access Signature as an authentication methods, since SQL Server will just rely on Windows Authentication. Here Jovan showed a sample usage:

Importing JSON files into SQL Server using OPENROWSET (BULK) Parsing 4GB JSON with SQL ServerIs the code available?

Sure, there is a Gist for that:

https://gist.github.com/yorek/59074a4c4176191687d6a17dabb426ed

No comments:

Post a Comment